2025 Wrapped: The Year In Evaluation Learning

December 2025

About the author: Stephanie Spencer is an Evaluation Associate with Three Hive Consulting and Eval Academy Coordinator. As a Credentialed Evaluator, she brings experience in mixed-methods evaluation across health, social service, and community sectors, with a focus on qualitative methods, reflective practice, and trauma-informed approaches.


As 2025 comes to a close, we’re reflecting on the topics that evaluators engaged with most this year and on what we learned from writing and sharing resources based on our work at Three Hive. Our aim stayed the same: support practical, accessible evaluation practice grounded in real-life work.

Looking Back: Key Milestones

This year, our work centred on creating practical tools and learning opportunities in response to the kinds of questions we heard most frequently from evaluators.

We added a second online course, Data Collection Methods in Program Evaluation, which offers step-by-step guidance on choosing and applying data collection methods in real projects.

We hosted our first free webinar, 3 Key Ways You’ve Been Using Likert Scales All Wrong, which addressed common questions we hear about survey design.

We shared a full week of content on the AEA365 blog, focusing on practical insights across the evaluation lifecycle, from responding to RFPs to promoting the use of results.

We added 40+ new articles and 15 new resources to support everyday evaluation tasks, from planning and data collection to analysis and reporting.

We refreshed our newsletter to make it easier to navigate and more useful for evaluators looking for quick tips, tools, and upcoming learning opportunities.

Top Articles Of 2025

Our most-read articles this year covered a mix of qualitative analysis, survey design, and planning tools:

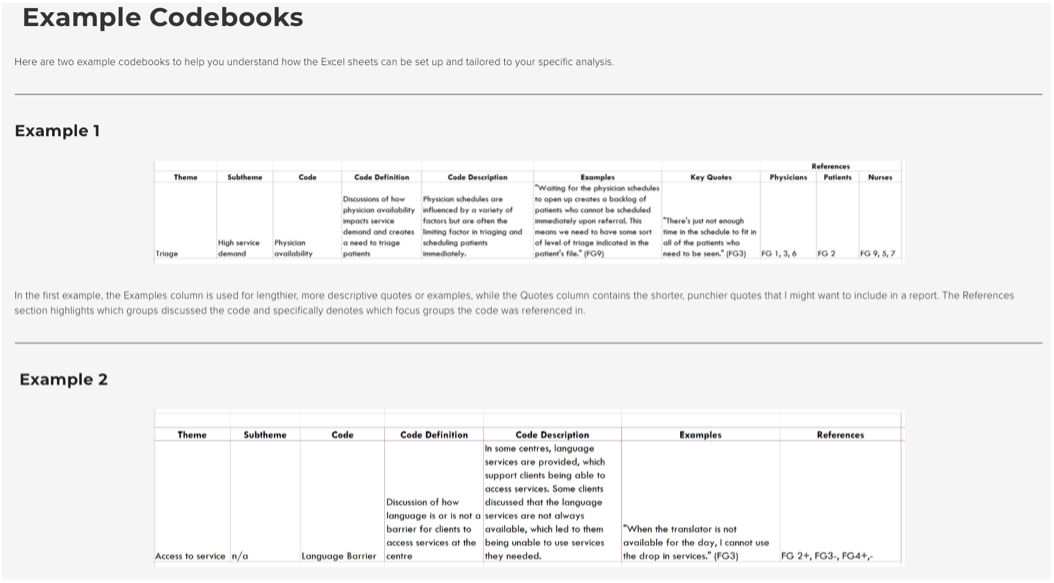

5. Creating a Qualitative Codebook

This article introduces qualitative codebooks as practical tools for organizing themes, codes, definitions, and examples, especially when multiple people are coding. It includes example structures and suggestions for what to track.

Key takeaway: A well-designed codebook improves consistency, supports team-based analysis, and makes it much easier to move from coded excerpts to clear, report-ready findings.

4. Finding the Right Sample Size (The Hard Way)

Building on our “easy way” article, this article walks through the formulas behind sample size calculators, unpacking concepts like margin of error, confidence level, z-scores, and population proportion.

Key takeaway: Even if you rely on online calculators, understanding the logic behind sample size estimates helps you make defensible choices and explain them clearly to interest holders.
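To make those concepts concrete, here is a minimal sketch of one widely used sample size calculation for estimating a proportion (often called Cochran's formula, with an optional finite population correction). It is an illustration under stated assumptions, not necessarily the exact formula walked through in the article or used by any particular online calculator. The function name `sample_size` and its parameter names are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size(margin_of_error, confidence_level=0.95, proportion=0.5, population=None):
    """Estimate the sample size needed to estimate a proportion.

    Assumes simple random sampling. proportion=0.5 is the most
    conservative choice because it yields the largest sample size.
    """
    # z-score for a two-sided confidence level (about 1.96 for 95%)
    z = NormalDist().inv_cdf(1 - (1 - confidence_level) / 2)

    # Sample size for a very large (effectively infinite) population
    n = (z ** 2) * proportion * (1 - proportion) / margin_of_error ** 2

    # Optional finite population correction for smaller populations
    if population is not None:
        n = n / (1 + (n - 1) / population)

    # Round up: you cannot survey a fraction of a person
    return math.ceil(n)


large_population = sample_size(0.05)                   # 385
small_population = sample_size(0.05, population=1000)  # 278
print(large_population, small_population)
```

With a 5% margin of error and 95% confidence, the sketch returns the familiar figure of about 385 for a large population, and a smaller number once the population is capped at 1,000. Seeing where those numbers come from can make it easier to explain the trade-off between precision and feasibility.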

3. Everything You Need to Know About Likert Scales

This article breaks down the key decisions involved in designing and interpreting Likert-type questions: how many points to use, whether to include a neutral midpoint, when to choose unipolar or bipolar scales, and what those choices mean for analysis and reporting.

Key takeaway: There’s no single “correct” Likert design, but you should make intentional choices (e.g., anchors, neutrality, collapsing categories) based on your context, audience, and analysis needs, and be explicit about those decisions.
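As one illustration of making a collapsing decision explicit, the sketch below groups a five-point agreement scale into three categories and reports the percentage in each. The scale labels, the `COLLAPSE` mapping, and the `collapse_responses` function are all hypothetical examples rather than anything prescribed by the article; your own labels and groupings should follow your survey design.

```python
from collections import Counter

# Hypothetical 5-point agreement scale mapped to 3 reporting categories.
# Writing the mapping down documents the decision for readers of the report.
COLLAPSE = {
    "Strongly disagree": "Disagree",
    "Disagree": "Disagree",
    "Neutral": "Neutral",
    "Agree": "Agree",
    "Strongly agree": "Agree",
}


def collapse_responses(responses):
    """Return the percentage of responses in each collapsed category."""
    if not responses:
        raise ValueError("No responses to summarize")
    counts = Counter(COLLAPSE[r] for r in responses)
    total = len(responses)
    return {
        category: round(100 * counts[category] / total, 1)
        for category in ("Disagree", "Neutral", "Agree")
    }


# Illustrative data only, not real survey results
responses = ["Strongly agree", "Agree", "Agree", "Neutral", "Disagree"]
summary = collapse_responses(responses)
print(summary)
```

Keeping the mapping in one place means the same rule is applied to every question, and it can be copied straight into a methods note.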

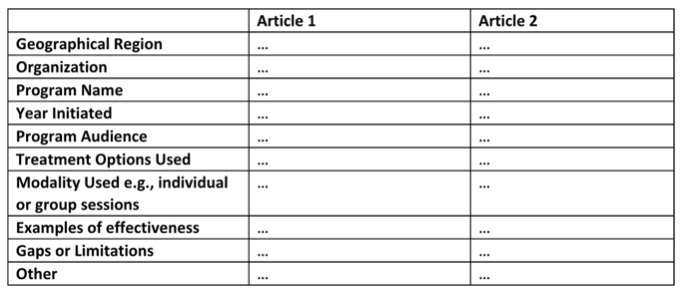

2. How to Complete an Environmental Scan: Avoiding the Rabbit Holes

This article demystifies environmental scans and distinguishes them from literature reviews, emphasizing the mix of grey literature, documents, and interest holder input. It offers a six-step approach to staying focused and avoiding endless searching.

Key takeaway: A clear purpose, focused questions, and a plan for where and how you’ll look for information are essential to avoid “rabbit holes” and produce findings that actually inform decisions.

1. Interpreting Themes from Qualitative Data: Thematic Analysis

Our most-visited article for the fourth year in a row! This article walks readers through a clear 5-step process for thematic analysis (from familiarization to reporting) and compares inductive vs. deductive approaches.

Key takeaway: Start with deep familiarity with your data, be transparent about your process, and treat coding as an iterative, learn-by-doing method rather than a one-off task.

Top Resources in 2025

These resources were the most downloaded in 2025, supporting evaluators with practical tools they could use immediately in planning, data collection, and reporting.

5. Complete Reporting Bundle

A collection of templates and tools to support report writing, layout, and structure. It includes sample sections, stylistic guidance, and prompts that help evaluators build clear, coherent, and visually consistent reports.

Why it’s useful: Streamlines the reporting process and helps evaluators focus on communicating findings rather than starting from a blank page.

4. A Beginner’s Guide to Evaluation (Infographic)

Designed for newcomers to the field, this one-page infographic summarizes foundational concepts and links to introductory Eval Academy articles and tools.

Why it’s useful: A quick, accessible starting point for students, new hires, or anyone seeking a high-level overview of evaluation.

3. Survey Design Tipsheet

This free resource offers a visual overview of common survey question types (multiple choice, Likert-type, and open-ended) and includes practical tips for avoiding common pitfalls like double-barreled questions, unclear response options, or unnecessary respondent burden.

Why it’s useful: Whether you’re creating a new survey or improving an existing one, the tipsheet helps you make intentional design choices grounded in good survey practice.

2. Evaluation Question Checklist

A practical scoring tool that helps evaluators assess and refine their evaluation questions. It prompts users to reflect on clarity, feasibility, alignment, and usefulness, ensuring questions set up the rest of the evaluation for success.

Why it’s useful: Strong evaluation questions drive strong designs; this checklist makes the refinement process easier and more systematic.

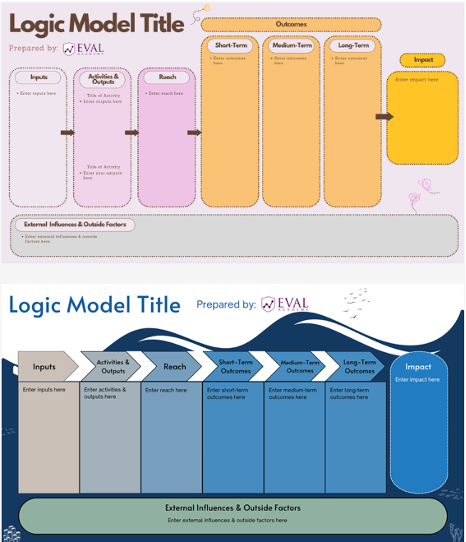

1. Logic Model Templates for Canva

This logic model template, built specifically for Canva Whiteboard, makes it easier to create logic models that can expand, shrink, and export cleanly at any size. The template uses only free Canva elements and is fully customizable, making it useful for students, program staff, and experienced evaluators alike.

Why it’s useful: A flexible, visually engaging way to build logic models without fighting formatting. Useful for presentations, reports, and collaborative planning sessions.

What’s Coming in 2026

We’re continuing to build resources and learning opportunities that support the real questions evaluators face in their work. Here’s a preview of what’s in development for the new year:

Evaluation Frameworks (new course): A foundational, plain-language introduction to frameworks, approaches, methods, and designs that helps evaluators choose the right model for their context.

Data Analysis: Excel Fundamentals (new course): A practical course that focuses on the Excel skills evaluators use most often, from cleaning data to running basic analyses.

Data Visualization Foundations (new course): A step-by-step guide to creating clear, meaningful charts with the tools most evaluators already have, and using visuals to communicate findings more effectively.

CES2026 Conference: We’re excited to be attending the CES2026 conference in Edmonton next June and hope to see many of you there!

More webinars: We’ll continue offering accessible learning opportunities and plan to expand beyond single events to create ongoing spaces for shared learning.

New templates, tip sheets, and infographics: We’ll keep adding practical tools that support planning, data collection, analysis, and reporting.

A short survey: We’ll be reaching out to newsletter subscribers to learn what topics and tools you’d find most useful in 2026.

If there’s something you’d love to learn or a tool you wish existed, feel free to share your ideas in the comments section below. Hearing from evaluators helps us understand what’s most useful, and it directly shapes what we build next.

Thank You!

On behalf of the Eval Academy team, thank you to everyone who read, shared, commented, downloaded resources, or attended a course or webinar this year. We’re excited to continue learning and sharing in 2026. Feel free to leave a comment below about anything you’d like to see from us in the new year!